As digital systems become increasingly embedded in everyday life, the demand for faster, more reliable, and context-aware intelligence is growing rapidly. From smart factories and autonomous vehicles to healthcare devices and cybersecurity systems, organizations are seeking ways to process data closer to where it is generated. This shift has brought Edge AI—the deployment of artificial intelligence models directly on edge devices—into sharp focus.

Edge AI represents a significant evolution in how AI systems are designed, deployed, and governed. Rather than relying solely on centralized cloud infrastructure, Edge AI enables data processing and decision-making at or near the source of data generation. This architectural change has wide-ranging implications for performance, privacy, resilience, and learning systems across industries.

Understanding Edge AI

At its core, Edge AI refers to the execution of machine learning or AI algorithms on local devices such as sensors, cameras, industrial controllers, smartphones, or on-premise gateways. These devices operate at the “edge” of the network, as opposed to centralized data centers or cloud environments.

Traditional cloud-based AI requires data to be transmitted from devices to remote servers for processing and inference. While effective in many scenarios, this approach can introduce latency, bandwidth constraints, dependency on connectivity, and data privacy concerns. Edge AI addresses these challenges by enabling local inference, often complemented by cloud-based training or orchestration.

Edge AI does not replace cloud AI; the two often work together in a hybrid model. The cloud remains essential for large-scale model training, updates, and analytics, while the edge handles real-time, context-sensitive decisions.

Key Drivers Behind the Rise of Edge AI

Several technological and operational factors are accelerating the adoption of Edge AI:

1. Latency-sensitive applications

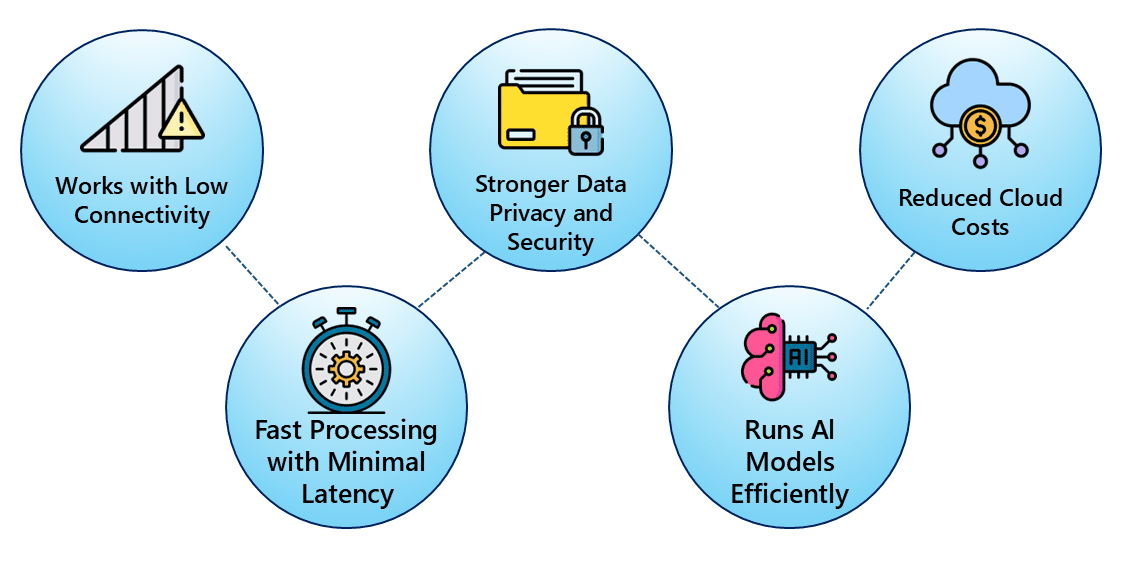

In scenarios such as autonomous driving, industrial safety systems, or medical monitoring, even milliseconds of delay can be critical. Edge AI enables near-instantaneous responses by eliminating the need for round-trip data transmission to the cloud.

2. Bandwidth and cost efficiency

With the proliferation of IoT devices, transmitting raw data continuously to the cloud can be expensive and impractical. Processing data locally reduces network load and operational costs by sending only relevant insights or aggregated data upstream.

3. Data privacy and sovereignty

Regulatory frameworks and user expectations increasingly emphasize data minimization and privacy. By keeping sensitive data on-device or within local infrastructure, Edge AI helps organizations align with privacy-by-design principles.

4. Reliability in low-connectivity environments

Edge AI systems can function independently of constant internet access, making them suitable for remote locations, critical infrastructure, and environments with unreliable connectivity.
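The bandwidth point above (driver 2) can be illustrated with a minimal sketch. It assumes a device that buffers a window of raw readings and sends only a compact summary, plus any out-of-range values, upstream. The function name `summarize_window`, the threshold, and the sample readings are hypothetical and chosen only for illustration.

```python
from statistics import mean


def summarize_window(readings, threshold):
    """Reduce a window of raw sensor readings to a compact summary.

    Only this summary would be sent upstream instead of every raw reading.
    Readings above `threshold` are kept verbatim so the cloud still sees them.
    """
    alerts = [r for r in readings if r > threshold]
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        "alerts": alerts,
    }


# Hypothetical window of temperature readings collected on-device.
window = [20.0, 21.0, 22.0, 95.0, 21.0]
summary = summarize_window(window, threshold=80.0)
print(summary)
```

In this sketch five raw values collapse into one small record; with realistic window sizes (hundreds or thousands of samples) the reduction in upstream traffic would be correspondingly larger.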

The Technological Enablers

The feasibility of Edge AI is a recent development that hinges on concurrent advances across several technology layers, including:

- Hardware Innovations: The development of specialized, power-efficient processors is paramount. These include Neural Processing Units (NPUs), AI accelerators, and even neuromorphic chips that mimic the brain’s architecture. These chips deliver high TOPS (Tera Operations Per Second) per watt, enabling complex model inference within the tight thermal and power budgets of edge devices.

- Algorithmic Efficiency: The AI research community has made significant strides in model optimization techniques. Methods like quantization (reducing the numerical precision of model weights), pruning (removing redundant weights or neurons), and knowledge distillation (training smaller “student” models from larger “teacher” models) have enabled the creation of compact, fast, and accurate models suitable for edge deployment. The rise of TinyML (machine learning for ultra-low-power devices) exemplifies this trend.
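To make the quantization idea above concrete, here is a minimal sketch of symmetric per-tensor quantization to the int8 range, written in plain Python. It is an illustration under simplifying assumptions (a flat list of weights, one shared scale, no calibration data), not how any particular framework implements it. The function names and sample weights are hypothetical.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Avoid division by zero when every weight is 0.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]


# Hypothetical weights for illustration.
weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q, scale, restored)
```

Each weight is now stored in one byte instead of four (for float32), at the cost of a rounding error of at most half the scale per weight.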

Applications Across Industries

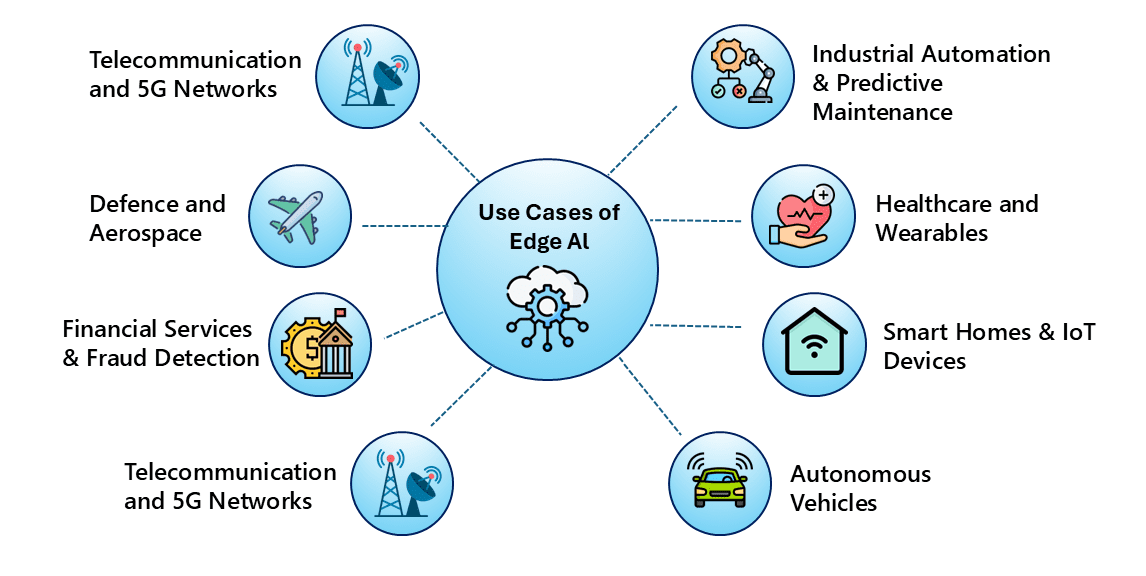

Edge AI is not a monolithic technology but a versatile capability whose value is defined by its application context. Its impact spans multiple sectors:

- Manufacturing and Industry

In smart factories, Edge AI enables predictive maintenance, quality inspection, and anomaly detection directly on production lines. Real-time analysis of sensor data helps reduce downtime and improve operational efficiency.

- Healthcare and medical devices

Wearable devices, diagnostic equipment, and remote patient monitoring systems increasingly rely on on-device intelligence. Edge AI allows continuous analysis of health data while minimizing the transmission of sensitive patient information.

- Smart cities and infrastructure

Traffic management, public safety surveillance, and energy optimization systems benefit from localized AI decision-making. Edge-based processing supports scalability and responsiveness in urban environments.

- Cybersecurity and IT operations

Edge AI plays a growing role in detecting anomalies, threats, and policy violations at endpoints and network boundaries. Localized intelligence allows faster response to security events before they propagate across systems.

- Education and learning technologies

In learning environments, Edge AI can support adaptive assessments, offline learning tools, and personalized feedback without constant connectivity. This is particularly relevant in regions with limited network access.

- Consumer Electronics

From voice assistants in smart speakers to computational photography in smartphones, Edge AI is enhancing user experiences by making devices more intuitive, responsive, and personalized, all while keeping much of the user data on-device.
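Several of the applications above (production-line monitoring, endpoint security) rely on anomaly detection running locally. The following is a minimal sketch of one simple approach, a rolling z-score over recent readings. The class name, window size, threshold, and sample data are assumptions for illustration; real deployments would typically use a trained model tuned to the signal.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag values that deviate strongly from a window of recent readings."""

    def __init__(self, window_size=20, z_threshold=3.0, min_history=5):
        self.window = deque(maxlen=window_size)
        self.z_threshold = z_threshold
        self.min_history = min_history

    def update(self, value):
        """Return True if `value` is anomalous relative to recent history."""
        is_anomaly = False
        if len(self.window) >= self.min_history:
            mu = mean(self.window)
            sigma = pstdev(self.window)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                is_anomaly = True
        # Keep anomalous values out of the baseline so they do not skew it.
        if not is_anomaly:
            self.window.append(value)
        return is_anomaly


# Hypothetical vibration readings with one obvious spike.
detector = RollingAnomalyDetector(window_size=10, z_threshold=3.0)
readings = [10.0, 10.2, 9.9, 10.1, 10.0, 10.1, 25.0, 10.0]
flags = [detector.update(r) for r in readings]
print(flags)
```

Because the detector keeps only a small fixed-size window, its memory and compute footprint stays constant, which suits constrained devices.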

Technical Challenges and Constraints

Despite its advantages, Edge AI introduces unique challenges that require careful consideration:

1. Resource limitations

Edge devices typically have constrained compute power, memory, and energy availability. Designing efficient models through techniques such as model compression, quantization, and pruning is essential.

2. Hardware Heterogeneity

The sheer diversity of edge devices (different CPUs, NPUs, memory constraints) makes creating universally deployable AI models difficult, posing a significant software challenge.

3. Model lifecycle management

Deploying, updating, and monitoring AI models across thousands or millions of distributed devices is complex. Ensuring consistency, security, and performance over time requires robust orchestration mechanisms.

4. Security risks at the edge

While Edge AI can enhance security, edge devices themselves can become targets for tampering or exploitation. Protecting models, data, and firmware is a critical concern.

5. Standardization and interoperability

The Edge AI ecosystem spans diverse hardware platforms, frameworks, and operating environments. Lack of standardization can increase integration complexity and maintenance overhead.
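As one small illustration of the security concern above (challenge 4), a device can check a model file against a known digest before loading it. The sketch below uses SHA-256 from Python's standard library. The byte string standing in for a model and the function names are hypothetical, and the sketch assumes the expected digest reaches the device through a trusted channel; production systems would more likely rely on cryptographic signatures and secure boot.

```python
import hashlib
import hmac


def model_digest(model_bytes):
    """Return the SHA-256 hex digest of a serialized model."""
    return hashlib.sha256(model_bytes).hexdigest()


def verify_model(model_bytes, expected_digest):
    """Return True only if the model matches the expected digest."""
    # compare_digest does a constant-time comparison of the two strings.
    return hmac.compare_digest(model_digest(model_bytes), expected_digest)


# Hypothetical model artifact; the digest would normally be published
# by the team that built the model, not computed on the device.
model_bytes = b"hypothetical-model-weights-v1"
expected = model_digest(model_bytes)

ok = verify_model(model_bytes, expected)
tampered_ok = verify_model(model_bytes + b"x", expected)
print(ok, tampered_ok)
```

A check like this detects a modified file, but it does not by itself protect the digest or the verification code from an attacker with control of the device.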

Ethical and Governance Considerations

Edge AI also raises important ethical and governance questions. Decisions made locally—often autonomously—can have real-world consequences. This makes transparency, accountability, and explainability crucial, especially in sensitive domains such as healthcare, surveillance, and finance.

Furthermore, as AI moves closer to users and physical environments, biases embedded in models may manifest more directly. Responsible development practices, rigorous testing, and ongoing monitoring are essential to ensure fairness and reliability.

The Role of Edge AI in the Future AI Landscape

Looking ahead, Edge AI is expected to play a foundational role in the next phase of digital transformation. Advances in specialized AI hardware and improvements in energy-efficient computing are making edge deployments more viable at scale. At the same time, emerging paradigms like federated learning—where models are trained collaboratively across edge devices without sharing raw data—highlight how edge intelligence can contribute to collective learning while preserving privacy.
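The aggregation step at the heart of federated learning can be sketched in a few lines. The example assumes each device trains locally and reports only its weight vector and sample count. The server then takes a sample-weighted average, in the style of federated averaging. The function name and the toy updates are hypothetical, and the sketch omits client selection, secure aggregation, and multiple training rounds.

```python
def federated_average(client_updates):
    """Combine client weight vectors into one global vector.

    client_updates: list of (weights, num_samples) tuples, where all
    weight lists have the same length. Clients with more local samples
    contribute proportionally more to the average.
    """
    total_samples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(w[i] * n for w, n in client_updates) / total_samples
        for i in range(dim)
    ]


# Two hypothetical devices; raw training data never leaves either one.
updates = [
    ([1.0, 2.0], 10),  # device A: weights, local sample count
    ([3.0, 4.0], 30),  # device B
]
global_weights = federated_average(updates)
print(global_weights)
```

Here device B has three times as many samples as device A, so the global weights land three quarters of the way toward B's values.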

Rather than being viewed as a standalone trend, Edge AI should be understood as part of a broader architectural shift toward distributed intelligence. Organizations that thoughtfully integrate edge and cloud capabilities will be better positioned to build systems that are resilient, scalable, and aligned with real-world constraints.

For India’s tech industry, Edge AI presents a multi-faceted opportunity: developing optimized algorithms for global hardware, creating vertical-specific solutions for agriculture, manufacturing, and healthcare, and building the management and security software for distributed AI systems.

Conclusion

Edge AI marks a meaningful transition in how intelligence is embedded into digital and physical systems. By bringing computation closer to data sources, it addresses critical challenges related to latency, privacy, and reliability while unlocking new possibilities across industries.

As adoption grows, success will depend not only on technological innovation but also on responsible governance, cross-disciplinary collaboration, and a clear understanding of where edge-based intelligence adds genuine value. In this sense, Edge AI is less about moving AI away from the cloud and more about placing intelligence exactly where it is needed most.